Op-Ed by Matthew Feeney

Twitter recently re-activated Jesse Kelly’s account after telling him he was permanently banned from the platform. The social media giant informed Kelly, a conservative commentator, that his account was permanently suspended “due to multiple or repeat violations of the Twitter rules.” Conservative pundits, journalists, and politicians criticized Twitter’s decision to ban Kelly, with some alleging that his ban was the latest example of perceived anti-conservative bias in Silicon Valley.

While some might be infuriated with what happened to Kelly’s Twitter account, we should be wary of calls for government regulation of social media and related investigations in the name of free speech or the First Amendment. Companies such as Twitter and Facebook will sometimes make content moderation decisions that seem hypocritical, inconsistent, and confusing. But private failure is better than government failure, not least because—unlike government agencies—Twitter has to worry about competition and profits.

A Lack of Consistency

It’s not immediately clear why Twitter banned Kelly. A fleeting glance at Kelly’s Twitter feed reveals plenty of eye roll-worthy content, including his calls for the peaceful breakup of the United States and his assertion that only an existential threat to the United States can save the country. His writings at the conservative website The Federalist include bizarre and unfounded declarations such as, “barring some unforeseen awakening, America is heading for an eventual socialist abyss.” In the same article, he called for his readers to “Be the Lakota” after a brief discussion about how Sitting Bull and his warriors took scalps at the Battle of Little Bighorn. In another article, Kelly made the argument that a belief in limited government is a necessary condition for being a patriot.

I must confess that I didn’t know Kelly existed until I learned the news of his Twitter ban, so it’s possible that those backing his ban from Twitter might be able to point to other content they consider more offensive than what I just highlighted. But from what I can tell, Kelly’s content hardly qualifies as suspension-worthy.

Some opponents of Kelly’s ban (and indeed Kelly himself) were quick to point out that Nation of Islam leader Louis Farrakhan still has a Twitter account despite making anti-Semitic remarks. Richard Spencer, the white supremacist president of the innocuously-named National Policy Institute who pondered taking my boss’ office, remains on Twitter, although his account is no longer verified.

Private Speech Standards

All of the debates about social media content moderation have produced some strange proposals. Earlier this year I attended the Lincoln Network’s Reboot conference and heard Dr. Jerry A. Johnson, the president and chief executive officer of the National Religious Broadcasters, propose that social media companies embrace the First Amendment as a standard. Needless to say, I was surprised to hear a conservative Christian urge private companies to embrace a content moderation standard that would require them to allow animal abuse videos, footage of beheadings, and pornography on their platforms. Facebook, Twitter, and other social media companies have sensible reasons for not using the First Amendment as their content moderation lodestar.

Rather than turning to First Amendment law for guidance, social media companies have developed their own standards for speech. These standards are enforced by human beings (and the algorithms human beings create) who make mistakes and can unintentionally or intentionally import their biases into content moderation decisions. Another Twitter controversy from earlier this year illustrates how difficult it can be to develop content moderation policies.

Shortly after Sen. John McCain’s death, a Twitter user posted a tweet that included a doctored photo of Sen. McCain’s daughter, Meghan McCain, crying over her father’s casket. The tweet included the words “America, this ones (sic) for you” and the doctored photo, which showed a handgun being aimed at the grieving McCain. McCain’s husband, Federalist publisher Ben Domenech, criticized Twitter CEO Jack Dorsey for keeping the tweet on the platform. Twitter later took the offensive tweet down, and Dorsey apologized for not taking action sooner.

The tweet aimed at Meghan McCain clearly violated Twitter’s rules, which state: “You may not make specific threats of violence or wish for the serious physical harm, death, or disease of an individual or group of people.”

Twitter’s rules also prohibit hateful conduct or imagery, as outlined in its “Hateful Conduct Policy.” The policy seems clear enough, but a look at Kelly’s tweets reveals content that someone could interpret as hateful, even if some of the tweets are attempts at humor. Is portraying Confederate soldiers as “poor Southerners defending their land from an invading Northern army” hateful? What about a tweet bemoaning women’s right to vote? Or tweets that describe our ham-loving neighbors to the North as “garbage people” and violence as “underrated”? None of these tweets seem to violate Twitter’s current content policy, but someone could write a content policy that would prohibit such content.

Tough Calls

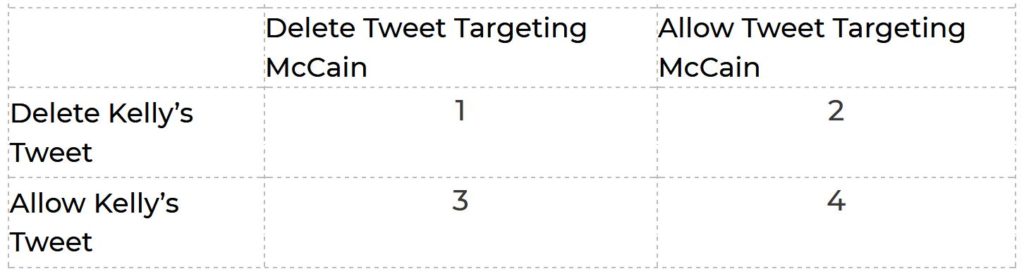

Imagine you are developing a content policy for a social media site, and your job is to consider whether content identical to the tweet targeting McCain and content identical to Kelly’s tweet concerning violence should be allowed or deleted. You have four policy options:

Many commentators seem to back option 3, believing the tweet targeting McCain should’ve been deleted while Kelly’s tweet should be allowed. That’s a reasonable position. But it’s not hard to see how someone could come to the conclusion that 1 and 4 are also acceptable options. Of all four options, only option 2, which would lead to the deletion of Kelly’s tweet but also allow the tweet targeting McCain, seems incoherent on its face.

Social media companies can come up with sensible-sounding policies, but there will always be tough calls. Having a policy that prohibits images of nude children sounds sensible, but there was an outcry after Facebook removed an Anne Frank Center article that had as its feature image a photo of nude children who were victims of the Holocaust. Facebook didn’t disclose whether an algorithm or a human being had flagged the post for deletion.

In a similar case, Facebook initially defended its decision to remove Nick Ut’s Pulitzer Prize-winning photo “The Terror of War,” which shows a burned, naked nine-year-old Vietnamese girl fleeing the aftermath of a South Vietnamese napalm attack in 1972. Despite the photo’s fame and historical significance, Facebook told The Guardian, “While we recognize that this photo is iconic, it’s difficult to create a distinction between allowing a photograph of a nude child in one instance and not others.” Facebook eventually changed course and allowed users to post the photo, citing the photo’s historical significance:

Because of its status as an iconic image of historical importance, the value of permitting sharing outweighs the value of protecting the community by removal, so we have decided to reinstate the image on Facebook where we are aware it has been removed.

What about graphic images of contemporary and past battles? There is clear historic value to images from the American Civil War, the Second World War, and the Vietnam War, some of which include graphically violent content. A policy prohibiting graphic depictions of violence sounds sensible, but like a policy banning images of nude children, it will not eliminate difficult choices or the possibility of results that many users will find inconsistent and confusing.

Given that whoever is developing content moderation policies will be put in the position of making tough choices, it’s far better to leave these choices in the hands of private actors rather than government regulators. Unlike the government, Twitter has a profit motive and faces competition. As such, it is subject to far more accountability than the government. We may not always like the decisions social media companies make, but private failure is better than government failure. An America where unnamed bureaucrats, not private employees, determine what can be posted on social media is one where free speech is stifled.

Misguided Calls for Government Regulation

To be clear, the call for increased government intervention and regulation of social media platforms is a bipartisan phenomenon. Sen. Mark Warner (D-VA) has discussed a range of possible social media policies, including a crackdown on anonymous accounts and regulations modeled on the European so-called “right to be forgotten.” If such policies were implemented (First Amendment issues notwithstanding), they would inevitably lead to valuable speech being stifled. Sen. Ron Wyden (D-OR) has said he’s open to carve-outs of Section 230 of the Communications Decency Act, which protects online intermediaries such as Facebook and Twitter from liability for what users post on their platforms.

When it comes to possibly amending Section 230, Sen. Wyden has some Republican allies. Never mind that some of these Republicans don’t seem to fully understand the relevant parts of Section 230.

That social media giants are under attack from the left and the right is not an argument for government intervention. Calls to amend Section 230 or pass “anti-censorship” legislation pose a serious risk to free speech. If Section 230 is amended to increase social media companies’ exposure to liability suits, we should expect these companies to suppress more speech. Twitter users may not always like what Twitter does, but government intervention is not the remedy.
